I should mention that this post is written from the perspective of someone who wants a small file,

and still good quality, not perfect quality with zero filesize constraints. Therefore, much of what

I do here would not be ideal for a bloated release.

I knew that Cowboy Bebop was going to be a large encode from the beginning, so seeing output files

ranging from 250-500MiB or even 700MiB wasn’t terribly surprising, but when episode 23 completed and

I saw a size of 1.34GiB, I was a little worried. That’s about 4-5 times the average Judas bitrate.

There’s nothing inherantly wrong with a big file - I’m fine with episodes coming out at 450MiB

instead of 250MiB because crf is a quality based metric, and so if the file comes out large, then

it’s because x265 needed a few more bits to get a similar quality level. But, if a file comes out

that big, something’s obviously wrong.

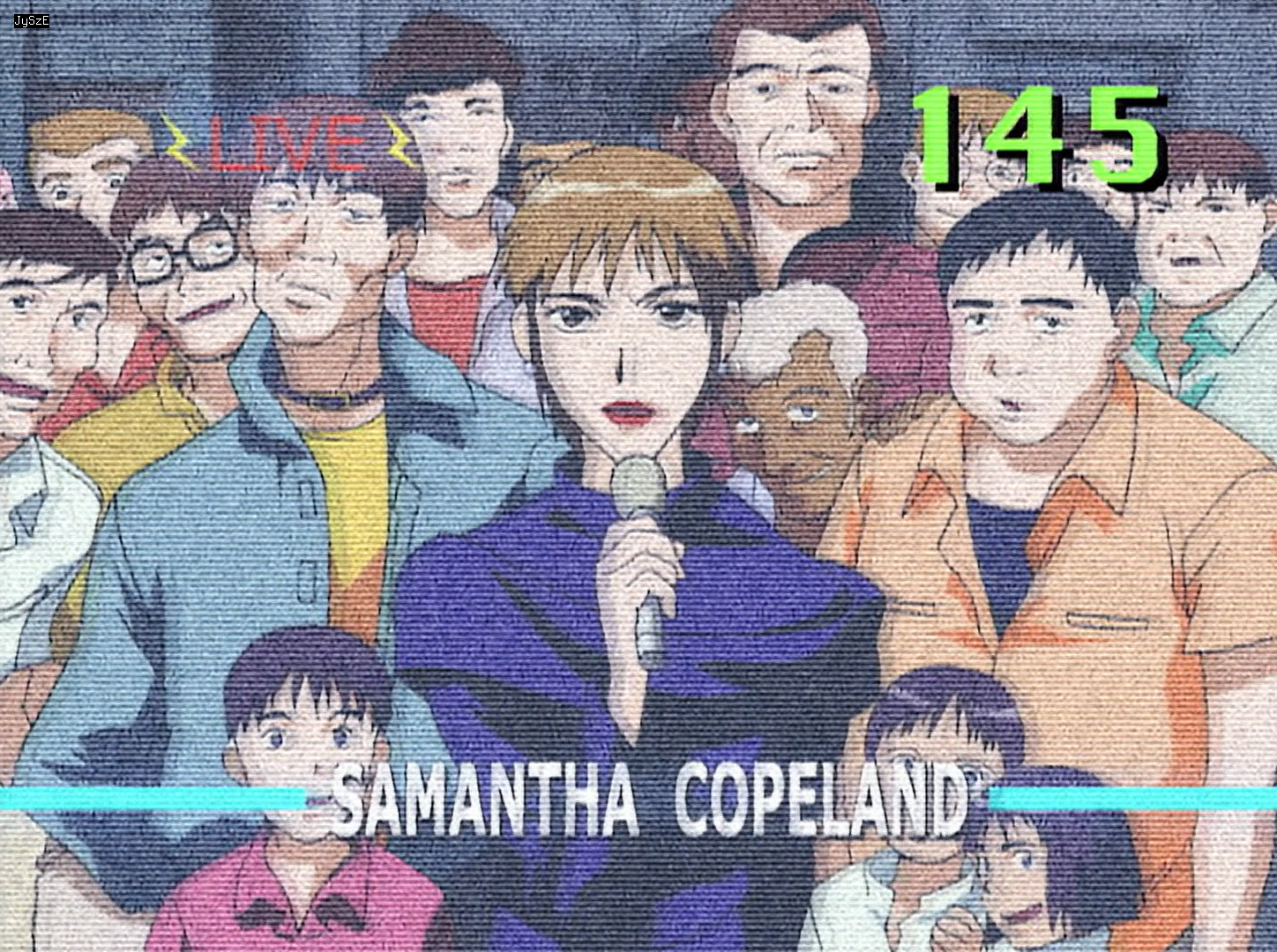

A quick glance at the source answered all questions I had immediately:

Let’s list the issues shall we?

- Strong dynamic grain ✓

- Weird horizontal lines that break motion estimation ✓

- Frames of pure static noise ✓

- Psychedelic effects with bright colours and quickly changing visuals ✓

x265 does not like any of this. At all. So, what can we do to get better efficiency?

The Dumb Method

Well, step 1 is just to try to make x265 compress more, after all, if the file is too big, the

simple solution is to ask x265 nicely to “make it smaller.” I’d already used some fairly strong

settings on bebop, so I just tried increasing the crf by 1 (18-19). This, rather expectedly, didn’t

do a whole lot, only reducing the file size from about 1.34GiB to 1.3GiB. Going further would likely

not have helped much either, only significantly reducing quality in non-fucked scenes (see

Tenrai-Sensei’s 912MiB CRF23 encode)

The real issue here is with the source, x265 is pretty good, but it’s not magic, and it won’t be

able to compress a video with all the issues listed above very well at all. I could always force the

bitrate to stay within a certain limit (say 9000kbps), but this will just cause the encoder to

destroy many frames that it can’t figure out how to get below this bitrate constraint.

Fixing the Source

So, we know what the issues are, how can we fix them? 3/4 issues aren’t really “fixable” as they are

just a part of the show: I can’t just cut out the scenes with this effect. However, we can at least

try to denoise it. The usual suspects (BM3D, knlm, ect.) aren’t much use here: they aren’t built to

deal with this sort of noise, and whilst at ultra high strengths they can work, they aren’t great.

CMDegrain

Generally, if you’re trying to get rid of dynamic grain, then the best bet is a temporal denoiser,

like CMDegrain. CMDegrain works best with high frequency noise (small dots), but at a high

enough strength it will basically get rid of any noise. The only problem is, the higher the strength,

the more motion blur it will cause.

Here’s what I ended up coming up with:

tv_cmde = eoe.dn.CMDE(

src,

tr=3,

thSAD=500,

thSADc=250,

refine=5,

prefilter=core.std.BoxBlur(src, 0, 6, 2, 1, 1),

freq_merge=False,

contrasharp=False,

)

Parameter Explanation:

- tr=3: Temporal radius. CMDE will consider up to 3 frames into the past and the future for motion

and denoising.

- thSAD=500: Denoising strength (luma)

- thSADc=250: Denoising strength (chroma).

- refine=5: Number of times to recalculate motion vectors. Since getting accurate motion vectors

from this video is extremely difficult, due to the horizontal lines, I recalulate

vectors as many times as is possible with integer mvtools using different block sizes.

This results in much better accuracy, with very little extra processing time.

- prefilter: Clip to calculate motion vectors from. I’m using a somewhat strong horizontal blur to

try to get rid of a lot of the grain, without touching the vertical lines. I would

generally use dfttest for this, but I was running out of cpu time.

- freq_merge=False: Don’t use the internal freq merge implementation (because I’m using custom

parameters later on, and it’s also slower)

- contrasharp=False: Don’t contrasharpen. Actually redundant here (since False is the default), but

I was experimenting with it earlier. Unfortunately, it doesn’t work well at all

due to the size and magnitude of the grain.

CMDegrain’s motion blur at a thSAD of 500, and subpar motion vectors due to the horizontal bar

effect, is pretty bad. My usual method to fix this is to do a freq_merge: simply a way to merge

two clips together using their frequencies as weighting, instead of simple averaging. This allows us

to extract the low frequencies (lineart) from the source, and merge them with the high frequencies

(noise) of the denoised clip. This effectively allows us to use as strong as a denoise as is

necessary, without causing huge motion blur.

Unfortunately, the noise in this episode is fairly low frequency, and therefore is difficult to get

rid of without also causing some level of motion blur. My freq_merge settings try to strike a

balence between motion blur and denoising:

tv_cmde = merge_frequency(

src,

tv_cmde,

slocation=None,

sigma=1024,

ssx=[0.0, 0, 0.03, 0, 0.06, 16, 0.15, 56, 0.2, 1024, 1.0, 1024],

smode=1,

sbsize=8,

sosize=6,

)

Parameter Explanation:

- src, tv_cmde: Sources to take low and high frequencies from.

- slocation=None: In order to extract frequencies from the x and y axis with different strengths, I

have to disable the internal slocation parameter.

- sigma=1024: Ultra high

DFTTest strength, used across the y axis. This means that all frequencies

are extracted from the denoised clip, and merged into the source clip, in the vertical

direction. Since frequencies are transposed against lines in the time dimension, this

means that all horizontal lines are copied from the denoised clip as is: i.e. the

horizontal bar effect.

- ssx=[…]: Frequency extraction strengths from 0 (lowest frequency) to 1 (highest frequency).

Since the noise in this episode is very large, I had to write a very precise custom

slocation to try to avoid motion blur without leaving all the noise in.

- smode=1, sbsize=8, sosize=6:

DFTTest processing parameters. Specified here as they give better

performance than the freq_merge defaults without reducing quality

by any significant amount.

For more information about how these parameters work, see the

DFTTest documentation.

DPIR

CMDegrain is pretty good, but not good enough, and so I decided to stack it with a much stronger

spatial denoiser - DPIR.

OVERLAP = 8

planes = split(src)

interleaved = core.std.Interleave([

core.std.Crop(planes[0], 0, src.width // 2 - OVERLAP),

core.std.Crop(planes[0], src.width // 2 - OVERLAP, 0),

core.std.AddBorders(core.std.StackVertical(planes[1:]), right=OVERLAP, color=32768)

])

processed = eoe.fmt.process_as(interleaved, partial(DPIR, strength=10, trt=True), "s")

left, right, chroma = processed[::3], processed[1::3], processed[2::3]

left = core.std.Crop(left, 0, OVERLAP)

right = core.std.Crop(right, OVERLAP, 0)

planes[0] = core.std.StackHorizontal([left, right])

planes[1] = core.std.Crop(chroma, 0, OVERLAP, 0, chroma.height // 2)

planes[2] = core.std.Crop(chroma, 0, OVERLAP, chroma.height // 2, 0)

dpir = join(planes)

Note: For whatever reason I didn’t have the latest dpir version when writing this, and instead I

used v1.7.1.

There’s quite a lot going on here, so I’ll break it down. The main processing is done here:

processed = eoe.fmt.process_as(interleaved, partial(DPIR, strength=10, trt=True), "s")

This calls DPIR on a 32-bit floating point (“s” stands for “single precision float”) version of the

clip (since DPIR only works on floating point clips).

Unfortunately, DPIR uses a huge amount of VRAM, and processing all three planes as is would use

about three times the amount of VRAM that I have addressable. DPIR has an internal solution to this:

tiling. The idea is to split the clip into tiles, and process each tile independently, one by one.

This effectively halves the amount of VRAM allocated. This does, however, cause an issue where the

border between the two tiles becomes visible after a strong denoise. I solved this by adding a small

overlap between the tiles, currently set to 8 pixels.

Finally, I also decided to denoise the chroma. I still didn’t have enough VRAM to do the chroma

planes seperately from the luma, but I realised that since the chroma planes are 1/2 the size of the

luma, I could easily stack them on top of each other, pad one side, and then interleave it with the

luma tiles. As long as the pad colour is neutral (32768 in this case), this doesn’t seem to cause

any issues near the borders.

So, to summarise:

- Split the clip into 2 tiles with an 8 pixel overlap

- Add the 2 chroma planes, stacked on top of each other, with 8 pixels of padding, to the tiles

- Interleave the three clips

- Run DPIR on the interleaved clip

- Split the interleaved clip back into three seperate clips

- Crop the overlap off of the left and right tiles

- Split the chroma tile back into two planes, cropping off the overlap padding in the process.

- Join the three planes back together

This effectively saves about 60% of the VRAM allocated by DPIR, without creating much extra

processing, and also without creating any new border/tiling artifacts.

Detecting the Horizontal Bar Effects

I’m pretty lazy, and generally follow the mantra of “If a task will take me a while to do,

I’ll automate it.” Most of the time this is a pretty stupid idea, and

whilst it can save extra work in the future (e.g. Vivy’s denoiser being reused later in the season

without me needing to do anything), It’s entirely unnecessary here since I know this issue only

persisted for one episode. Regardless, I couldn’t be bothered to go frame by frame and note down

frame ranges, so instead I’ll get Vapoursynth to detect the horizontal bar effects for me.

The horizontal bar effect present here might seem difficult to detect at first, but it’s actually

fairly simple. The bars are a constant “frequency,” and very prevalent, which means that they will

show up very clearly on a fourier transformation. I’m not going to explain exactly what a fourier

transormation is here, or how to read one, but you can read

the imagemagick documentation for a good overview.

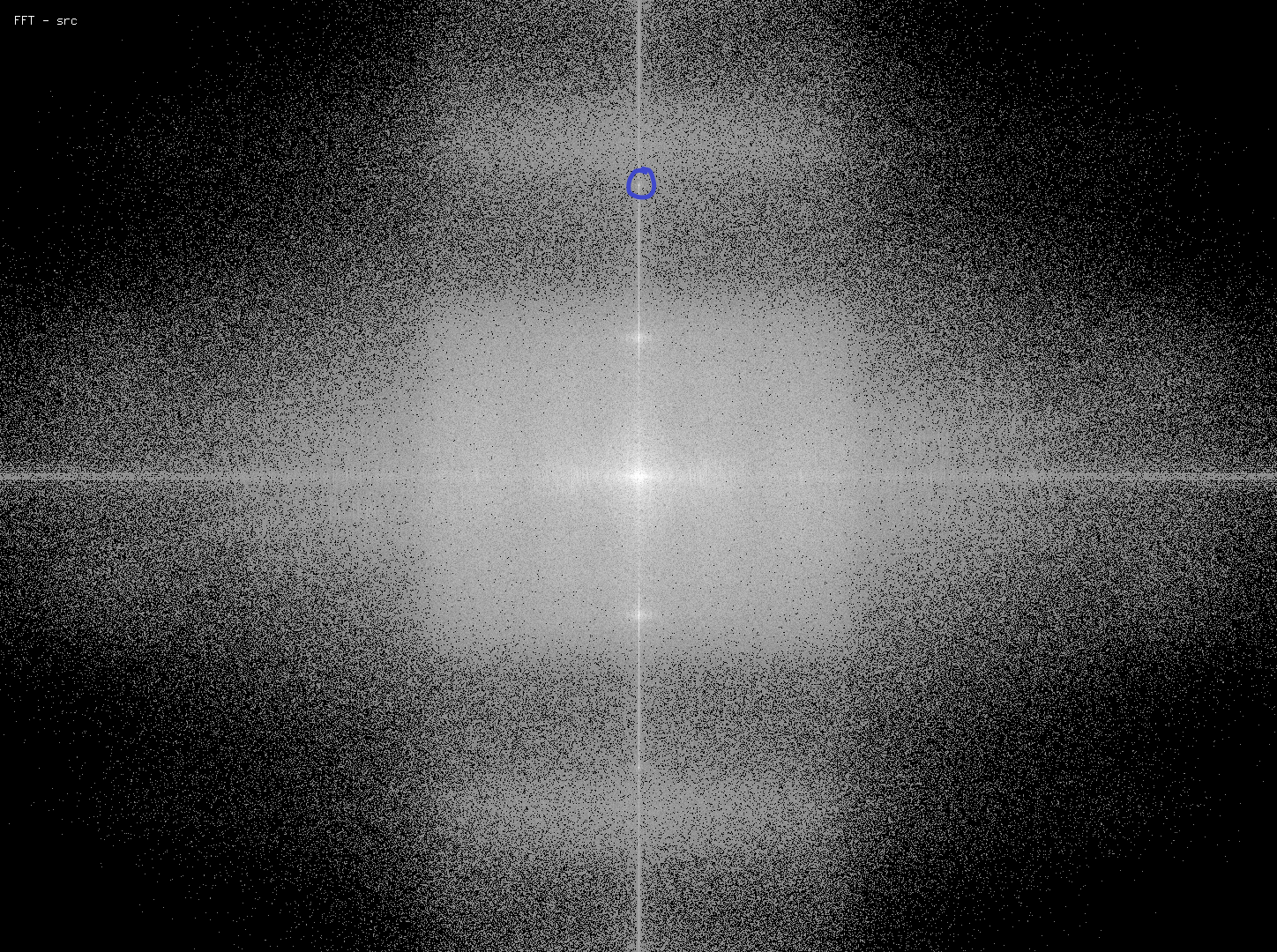

Here’s the fourier transformation of the same frame I showed above:

The cicled location corresponds to the frequency of the horizontal bar. On frames with the bars,

this will show up on the transformation as a white dot, like here. On normal frames, it will just be

a dark grey. We can then use this point as a reference to detect the horizontal bar effect:

# variable names changed for clarity

def choose_src(src, a, b, thr=180, location=(724, 210)):

def choose_func(n, f: vs.VideoFrame) -> vs.VideoFrame:

return a if np.array(f[0], copy=False)[location[1], location[0]] > thr else b

fft = core.fftspectrum.FFTSpectrum(get_y(clip), False)

return core.std.FrameEval(src, choose_func, fft, [a, b])

What this function does is, for every frame, get the FFT of the frame, and then check the value of

the point at the location specified. If it’s above the threshold, it will return the first clip,

otherwise it will return the second clip. This has a pretty much perfect accuracy for detecting the

horizontal bar effect. From there, we can simply use this function to decide which of the two clips

to use for any individual frame.

Since this also doesn’t depend on either of the two return clips, it also means that neither of them

have frames requested until after choosing which one we intend to use - which is a very good thing,

because DPIR is not fast, and this script would run at about 0.8fps for the entire thing if both

strong and weak denoisers were always running.

TL;DR, and summary

Cool denoising with CMDE and DPIR, custom freq_merge, and interesting detection code.

I think that overall this gave a much better result than just leaving the tv sections untouched.

This is obviously not as good quality as the original source, but I’d still say that it’s pretty

good, and the process of diagnosing this issue, and then coming up with a way to fix it was fun. The

only reason I encode really is because I just find it exciting to problem solve issues like this.

Hope you found this article interesting. As always, if you have any questions, feel free to contact

me on Discord: @End of Eternity#6292

Cheers, EoE

It’s been 2 years and one day since my first post here and I’ve done far less than I intended. This

is mainly due to my inability to write something that I am happy with, and so most posts get

deleted. Still, I am interested in writing about things, and people still ask me the same questions

over and over, so it would make sense to write a blog post to link those people to. Like this

question, for example.

How do I setup Vapoursynth?

I could just do the braindead answer and link to the docs,

which would make some sense, after all, these are the official installation instructions,

but they aren’t particularly helpful past getting Vapoursynth on your machine, and the applications list

is well out of date at this point (vsedit is now completely broken - F). So, in this post, I’ll

cover how I would set up a new machine for Vapoursynth script development.

I assume at least a basic understanding of the command line for most of this tutorial, but for those

who are new to this, if I say “run x,” I generally mean on the command line. This can be accessed

by Powershell (recommended) or cmd on windows. To open Powershell, use Win+X, and select “Windows

PowerShell.” For further reading, the mozilla

docs are pretty good.

Prerequisites

-

Windows 10/11

It’s not like Vapoursynth doesn’t run on linux distros or MacOS, I just don’t have a Mac, and

there are too many linux distros to cover. Many of the most popular ones have Vapoursynth on their

respective package managers. If you are really struggling, contact me and I can probably help.

-

Python 3.9

I hate python. I can never seem to get it to play nicely with anything. Probably a me thing. My

suggestion for installation is to run the installer (as admin), customise installation and select

“for all users.” Vapoursynth can be installed for just the user, but it’s caused me problems in

the past, and I know that this just works™. Also make sure to select pip so you don’t have to grab

it later.

I would suggest making sure all old Python installations are gone before installing 3.9. You can

have multiple python installs, and it can work, but I can’t ever get it to, so you’ll be on your

own.

As of today, the latest release of Vapoursynth (R57)

supports 3.9. You can compile it yourself for 3.10, but that’s outside of the scope of this post.

I will update the post when this changes.

-

Visual Studio Code

Any code editor with a built in terminal will work (for example, Atom), however I use vscode

myself, so will be using that for this guide.

Make sure to install the Python Extension!

-

pip

Not strictly necessary, however many packages are hosted on pypi, and pip just makes installation

of them easier. If you forgot to install it with Python earlier, run python -m ensurepip.

-

git

Also not necessary, but makes installation of packages not on pypi (like EoEfunc) easier to get.

Vapoursynth

Vapoursynth R57 can be downloaded from here

Run the installer as administrator, and just leave everything selected. Afterwards, go to the

console and run python -c 'from vapoursynth import core; print(core.version())'. You should get

details of your Vapoursynth installation printed to the console. If not, something’s gone wrong.

Plugins / Scripts

Vapoursynth can be used as is, vspipe should now be in your PATH (i.e. you can run vspipe), so you

can run a basic script, however you’ve got no extra plugins or packages, nor any way to preview

your outputs. You can browse VSDB for many plugins/packages that you might want,

but if you just want a quick way to download basically all the stuff you’ll ever need, feel free to

use the Judas Vapoursynth Install Script.

To run, just extract it, and then right click on install.ps1 and click “Run with PowerShell.”

Assuming you installed Python/Vapoursynth as described above, you should be able to leave the

installation paths as they are (hit enter twice).

Note that this is an internal Judas install script, so some of the stuff in here is held at an older

version than is available for compatibility reasons (e.g. lvsfunc, vardefunc). These can be upgraded

manually via pip should you wish to. It also assumes you have all the prerequisites above (except

vscode) installed already.

Previewing

Next, I’d suggest installing some way of previewing your scriots. My favourite previewer is Endill’s

Vapoursynth Preview. He hasn’t yet upgraded it to

work with the latest Vapoursynth version, so I’d suggest using Akarin’s fork.

To install, open the folder you want vspreview to be in (I use C:\PATH, but anywhere works), hold

shift and right click in the folder, and click on “Open PowerShell window here.” Now run

git clone https://github.com/AkarinVS/vapoursynth-preview.git. This will copy all the files from

the git repository into a new folder called “vapoursynth-preview.”

Now, we need to add vspreview as a launch configuration in vscode. Open vscode, and press F1, then

type “Preferences” into the search bar. Click on “Preferences: Open Settings (JSON)”. Paste the

following into the file that opens, replacing the “program” path with wherever you installed

vspreivew. Make sure to use double backslashes in the path so it doesnt break!

{

"files.associations": {

"*.vpy": "python",

},

"launch": {

"configurations": [

{

"args": [

"${file}"

],

"console": "integratedTerminal",

"name": "Vapoursynth: Preview",

"program": "C:\\Path\\To\\vapoursynth-preview\\run.py",

"request": "launch",

"type": "python"

},

],

"version": "0.2.0"

},

}

Now you should be able to press F5 to preview whatever script you currently have open. Any easy test

for this is below, save it as test.vpy and try pressing F5. You should get a window open with a

red frame. I’ve also included a file association for vpy to python so that you get syntax

highlighting.

My full vscode settings are available here, though

I warn you, it’s not organised and half the settings in there are old. If you do use them, you

should also install Pylance,

black (pip install black) and flake8

(pip install flake8), as well as Fira Code.

import vapoursynth as vs

core = vs.core

core.std.BlankClip(color=[255, 0, 0]).set_output()

All done!

Hopefully this has been helpful for anyone trying to setup an environment to start learning

Vapoursynth. Eventually I’ll write a proper post on learning to write scripts without it being too

hand holdy like my initial attempt. If you run into

any issues, my dm’s are always open (End of Eternity#6292).

Cheers, EoE

It has been exactly 4 months since the first installation of this little series of mine, however I’ve finally gotten the motivation to actually write something again. It’s not the numpy related post as I promised in part 1, however I can at least confirm that that is coming (see the vsnumpy overhaul in EoEfunc). In the meantime though, I wanted to talk more about using FrameEvals.

Firstly, I need to make a correction regarding the differences between FrameEval and ModifyFrame. I had originally said,

“I’m using ModifyFrame here only because it’s a little faster than FrameEval, but both are equally usable.” - Me, Writing Faster Scripts - Part 1

However, there is a fairly significant difference between the two. FrameEval expects a VideoNode to be returned, where ModifyFrame expects a VideoFrame. This means that if you don’t know the exact frame index to be returned ahead of time, you must use clip.get_frame(index) for ModifyFrame. I had originally thought this to be a non issue, however after reports of hanging by multiple people, this was obviously incorrect. Myrsloik (the Vapoursynth dev) said they think that python’s GIL causes this issue (IEW Discord), but regardless of what it’s caused by, it can cause a problem, and therefore we should use FrameEval instead, and accept that it’s ever so slightly slower. Having said that, with the new API4 changes, FrameEval may have gained the performance difference back anyway. Oh, and this doesn’t occur with FrameEval because it requests the frame internally instead, bypassing the python threading issues.

Anyway, enough about what mistakes I made, let’s talk about mistakes other people made instead, and how they can be avoided in future.

Writing a Good Fast FrameEval Function

The secret to a fast FrameEval is to do as little as possible within the function. What I mean by this, is taking as much of the logic as possible outside. Often this means you’ll end up with a single if statement within the eval function, which just determines one of two clips to be returned. The more logic you put into the FrameEval, the more that has to be done every frame, and remember, Python is slow.

Here’s an example, helpfully “provided” by LightArrowsEXE (IEW Discord)

# All further examples will assume these imports

import vapoursynth as vs

from functools import partial

from vsutil import scale_value, get_depth

from lvsfunc.misc import get_prop

core = vs.core

def auto_lbox(clip: vs.VideoNode, flt: vs.VideoNode, flt_lbox: vs.VideoNode,

crop_top: int = 130, crop_bottom: int = 130) -> vs.VideoNode:

"""

Automatically determining what scenes have letterboxing

and applying the correct edgefixing to it

"""

def _letterboxed(n: int, f: vs.VideoFrame,

clip: vs.VideoNode, flt: vs.VideoNode, flt_lbox: vs.VideoNode

) -> vs.VideoNode:

crop = (

core.std.CropRel(clip, top=crop_top, bottom=crop_bottom)

.std.AddBorders(top=crop_top, bottom=crop_bottom, color=[luma_val, chr_val, chr_val])

)

clip_prop = round(get_prop(clip.std.PlaneStats().get_frame(n), "PlaneStatsAverage", float), 4)

crop_prop = round(get_prop(crop.std.PlaneStats().get_frame(n), "PlaneStatsAverage", float), 4)

if crop_prop == clip_prop:

return flt_lbox.std.SetFrameProp("Letterbox", intval=1)

return flt.std.SetFrameProp("Letterbox", intval=0)

luma_val = scale_value(16, 8, get_depth(clip))

chr_val = scale_value(128, 8, get_depth(clip))

return core.std.FrameEval(clip, partial(_letterboxed, clip=clip, flt=flt, flt_lbox=flt_lbox), clip)

Since this is LightCode™, there’s a helpful docstring to tell us that all this does is select a clip based on whether or not it has letterboxing. It works (I think), though it could definately do with some improvements. Starting with the first line of the function, we can see our first problem.

crop = (

core.std.CropRel(clip, top=crop_top, bottom=crop_bottom)

.std.AddBorders(top=crop_top, bottom=crop_bottom, color=[luma_val, chr_val, chr_val])

)

That looks like logic to me! This could definately be moved outside of our eval function, since nothing here depends on anything we calculated inside of the FrameEval.

The next lines are very problematic, as they use the cursed get_frame method, which as we discussed earlier, can cause Python to hiss at you (actually it does nothing and hangs, which is probably worse).

clip_prop = round(get_prop(clip.std.PlaneStats().get_frame(n), "PlaneStatsAverage", float), 4)

crop_prop = round(get_prop(crop.std.PlaneStats().get_frame(n), "PlaneStatsAverage", float), 4)

There’s luckily an easy fix to this too, as all Light is doing with these frames is reading a prop, which is what FrameEval is built for. Any clips passed to the prop_src (third) argument of FrameEval have their frames passed to the eval function in the f parameter, meaning we can add these two clips to the prop_src. Light actually got halfway there, putting clip into prop_src, though he forgot to actually use it in the eval func.

Finally, this gets us to the only required bit of logic in this function, the bit that chooses which clip to return:

if crop_prop == clip_prop:

return flt_lbox.std.SetFrameProp("Letterbox", intval=1)

return flt.std.SetFrameProp("Letterbox", intval=0)

The only change needed here is that we can set the props for flt and flt_lbox outside of the FrameEval instead. This might seem like something that won’t affect performance at all, since setting a frame prop can’t take all that long, however the issue is that this filter will be reinitilised every time the eval func is called. Since Vapoursynth evaluates frames lazily (as they are required), there’s no harm in setting the frame props for both clips, before the eval, since the filter logic will only be called when the frames are requested. This goes for all filters too, meaning as long as you don’t need to change a filter parameter based on an individual frame, it will always be faster to take the logic out of the FrameEval.

After all of our changes, our modified function looks like so:

def auto_lbox(...) -> vs.VideoNode:

"""

Automatically determining what scenes have letterboxing

and applying the correct edgefixing to it

"""

def _letterboxed(

n: int, f: list[vs.VideoFrame], clip: vs.VideoNode, flt: vs.VideoNode, flt_lbox: vs.VideoNode

) -> vs.VideoNode:

clip_prop = round(get_prop(f[0], "PlaneStatsAverage", float), 4)

crop_prop = round(get_prop(f[1], "PlaneStatsAverage", float), 4)

if crop_prop == clip_prop:

return flt_lbox

return flt

flt_lbox = core.std.SetFrameProp(flt_lbox, "Letterbox", intval=1)

flt = core.std.SetFrameProp(flt_lbox, "Letterbox", intval=0)

luma_val = scale_value(16, 8, get_depth(clip))

chr_val = scale_value(128, 8, get_depth(clip))

crop = (

core.std.CropRel(clip, top=crop_top, bottom=crop_bottom)

.std.AddBorders(top=crop_top, bottom=crop_bottom, color=[luma_val, chr_val, chr_val])

.std.PlaneStats()

)

return core.std.FrameEval(

clip,

eval = partial(_letterboxed, clip=clip, flt=flt, flt_lbox=flt_lbox),

prop_src = [clip.std.PlaneStats(), crop],

)

Look how small our eval function is now! Pretty much everything was moved outside, leaving only the rounding of the frame prop, and the comparison to determine which clip to return. If I was really anal, I could rewrite PlaneStats to automatically round to the 4th decimal place, though I’m pretty sure Python can do that fast enough to not be an issue.

A Conclusion

The best thing about this is that there is no difference in output, and it didn’t require any clever maths optimisations or anything to be improved. All that really needs to be done to improve the majority of eval functions, is just a reordering of the logic, and some thought put into what actually needs to be done within it. I hope that this encourages more people put off in the past by the apparent “abysmal performance” of FrameEval to try writing their own functions with a little more care. Anyone who’s unsure about anything can always feel free to contact me (End of Eternity#6292) on Discord, best done through the IEW Discord Server.

Cheers, and see you “soon” for some Vapoursynth + numpy magic.

A couple of weeks ago, someone in the IEW Discord server mentioned that one of their scripts was running slow, and asked if I could take a look at it. Within their script was something like this:

The original (slow) code

insertion_frame_indexes = [...] # A couple hundred frame indexes to duplicate

for i in insertion_frame_indexes:

clip = clip[:i] + clip[i + 1] + clip[i:]

For those with less Python/Vapoursynth experience, all this is doing is duplicating the frames at the indexes specified in insertion_frame_indexes. Seems fairly simple right? If clip was just a list of integers, this would take a few milliseconds to run on any half decent hardware, but we have to remember that this is a Vapoursynth clip, and they work a little different.

Running this function with a blank 1080p clip on my machine takes around 1s to output the first frame, and then runs at a pretty miserable 200fps. Given that the blank clip generates at around a maximum of 2000fps for me, that’s a not ideal 10x speed loss. I managed to fix this with some more optimised code that ran at a solid 1600fps, which you can see below. This raised the question for some, ‘Why is just splicing clips together so slow?’

My improved code

# insertion_frame_indexes same as above

frame_indexes = list(range(clip.num_frames))

for i in insertion_frame_indexes:

frame_indexes = frame_indexes[:i] + frame_indexes[i + 1] + frame_indexes[i:]

placeholder = core.std.BlankClip(clip, length=len(frame_indexes))

def _insert_frames_func(n: int, f):

return clip.get_frame(frame_indexes(n))

clip = core.std.ModifyFrame(placeholder, placeholder, _insert_frames_func)

Taken from the lvsfunc channel in IEW

louis: how the f**k is modifyframe faster than splice

We’ll delve a little deeper here to answer louis’ question, proving why my implementation is so much faster.

Analysis of the original: Why was it so slow?

Whenever I run into a problem with code, be that it’s giving unexpected outputs, it’s running unreasonably slow, or it is just plain not working, often a good place I’ll look is at how the functions I’m using work internally. Let’s have a look at what our original code does underneath the pretty syntax.

Our main offending line is clip = clip[:i] + clip[i + 1] + clip[i:]. In python, using [] after a variable calls it’s __getitem__ method, and then the + operator calls the __add__ method. Using the implementation for these methods for the VideoNode class, we can see that the line roughly translates to the following:

# I have split this up onto multiple lines for readability.

first = core.std.Trim(clip, last=i - 1)

insert = core.std.Trim(clip, first=i + 1, length=1)

last = core.std.Trim(clip, first=i)

clip = core.std.Splice([core.std.Splice([first, insert]), last])

Already, we can see some simple problems with this section of code. For example, std.Splice can accept a list of clips, so the final line could become core.std.Splice([first, insert, last]) to be a little less bloated, however this hasn’t solved the root of the problem. The major issue here is the for loop. Because we execute this section of code for every insertion frame in the list, we’re essentially chaining hundreds, or even thousands of std.Trim and std.Splice calls.

Now, you might wonder why this is an issue: trimming and splicing clips can’t be all that intensive a task for your RGB gaming rig, so why does it go so slow? The problem is that Vapoursynth doesn’t just do the splicing at the very beginning, and then run the rest of your filters on the rest of the frames - it instead works quite differently.

How do Vapoursynth Filters Actually Work?

Each filter in Vapoursynth must implement a few functions, one of which being an initialiser which reads your parameters, and sets the video info for the clip that is output, and another to get a frame at some position. When you run a Vapoursynth function, all you’re actually doing is calling the initialiser: no processing of the video is (usually) done.

This is generally ideal. Vapoursynth is a frame server: we only want the frames to be processed and given to us when we want them, otherwise we’d be waiting hours before being able to get the first few frames of video. However, in this case it does shoot us in the foot.

For the Trim and Splice functions, we can take a look at the source code to see what’s happening. Both filters are obviously very simple, but they both have to request and return each frame they need within their respective GetFrame functions. This has only a little overhead per individual filter, but when you’ve chained hundreds together, that starts to add up.

The tl;dr here is that the original couple lines of code expands to a very large number of functions, each of which depending on the last, in order to get the next frame. Requesting even just one frame creates a massive dependancy chain which hogs both CPU time and memory.

Making a faster function

We already know what the output clip should look like - so there’s no need to call lots of functions and force Vapoursynth to create a massive filterchain that’s just going to hog your CPU time. Instead, we can just be a little more clever in how we stitch our clip together, to make it happen in just one function instead of one thousand.

Let’s go through my original function line by line to see how it runs much faster.

# Firstly, we create a list of frames in the original clip.

frame_indexes = list(range(clip.num_frames))

# Next we perform all the logic in duplicating the frames.

# This is actually just copy pasted from the original code,

# but since this time we're using python lists, it's

# significantly faster, and doesn't create a long dependancy

# chain of filters that Vapoursynth will have to deal with

# later on. We can then use this as a mapping between the

# index of a frame in the new clip, and the old clip

for i in insertion_frame_indexes:

frame_indexes = frame_indexes[:i] + frame_indexes[i + 1] + frame_indexes[i:]

# We have to create a placeholder clip with the length, and

# format of the original, so that we request the right

# amount of frames from our later `ModifyFrame` function,

# and so later Vapoursynth filters know how to work with our

# clip.

placeholder = core.std.BlankClip(clip, length=len(frame_indexes))

# This is the function we'll pass to `ModifyFrame` that will

# actually request the correct frame at each index. I'm using

# `ModifyFrame` here only because it's a little faster than

# `FrameEval`, but both are equally usable.

# `f` here is unused, however `ModifyFrame` will still pass

# it anyway, so it must be left in order not to error out.

def _insert_frames_func(n: int, f):

return clip.get_frame(frame_indexes(n))

# Finally, we actually run our insertion function over the

# placeholder clip, and get back exactly the same thing as

# before, only this time in a single function, and multiple

# times faster. Neat.

clip = core.std.ModifyFrame(placeholder, placeholder, _insert_frames_func)

What We’ve Learnt

Overall, the final function is actually really rather simple - but fast code doesn’t need to be complex. What’s important here, is recognising how Vapoursynth does the processing internally, and working with it’s strengths. This doesn’t really follow the Zen of Python’s rule of “There should be one– and preferably only one –obvious way to do it,” but in my opinion this is still a readable, and possibly more explicit way to do the original function.

If you only took one thing away from this little talk of mine, then it should be to look closer at the Vapoursynth source. Don’t just assume that the obvious way is the fastest - figure out why it’s not, and then come up with something better. Python’s pretty powerful - and with FrameEval/ModifyFrame you can do some wacky stuff without needing to write a full plugin.

For my next post in this topic, I’m planning on talking about using numpy for speed boosts, and prototyping new functions in a nice and simple Python script. If you’ve got any feedback at all, please send it my way on Discord. I can be found hanging around on the IEW Discord server, or alternatively you can just leave an issue on the Github repo where this project is hosted from.

This took far longer than it should have

I got an email from Porkbun recently telling me that it’d been a year since I bought this domain (along with a couple other unused ones). Funny how quickly it’s gone, considering covid and all, though I am disappointed to have only managed two posts, one of which being a “Hello World.” Therefore, with the renewal of the domain lease, I’m going to try to renew this blog as well; and I have a few topics in mind.

The ‘Basics of Vapoursynth’ kind of post that I was originally going for was really kind of awful to be honest - whilst there aren’t any in depth guides that go over that stuff, it’s not particularly interesting to write that sort of garbage anyway. Instead of that, I plan now to talk mainly about issues I’ve run into whilst working with video, and Vapoursynth, and how I’ve found ways around those issues. This includes my thoughts about writing faster python scripts for Vapoursynth, explaining scripts that I have written for notoriously difficult shows like Steins;Gate, and my first attempt at porting an old, but still useful plugin (CCD).

If anyone else has anything they’d like me to talk about, feel free to contact me on Discord (End of Eternity#6292). I would advise you to email me, but the mail redirection still isn’t working and I haven’t been bothered to figure out why. Maybe later ¯\_(ツ)_/¯